Import user profiles

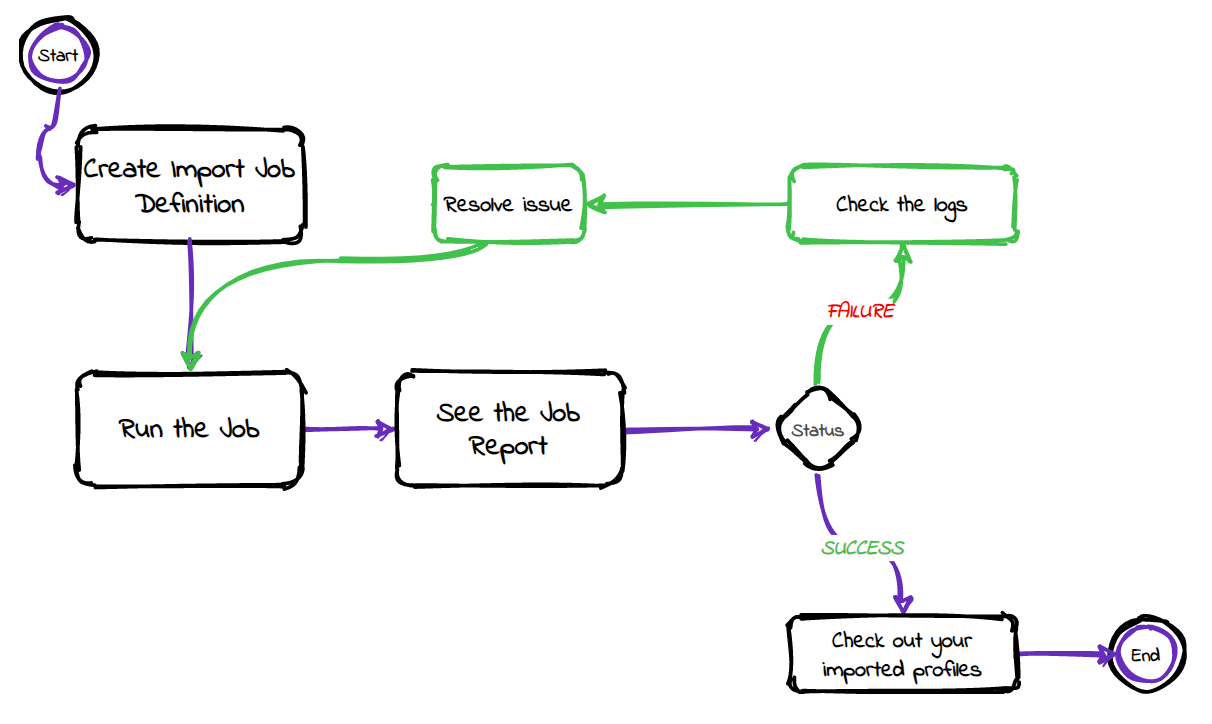

You can import user profiles from the ReachFive Console. The instructions below guide you step-by-step in importing your users. The visual below shows you a high-level flow of the process.

Import users from the console

Follow the instructions below to import user profiles from the ReachFive Console.

| Not all User Profile object fields can be imported. See the table on the User Profile data model page to see which fields can be imported. |

Prerequisites

- You must have access to the ReachFive Console.
- You must have a Developer, Manager, or Administrator role.
- You must have the Import Jobs feature enabled.
- Unless you choose the Force data update from file option, the following applies to importing user profiles:
  - Updating existing user: The updated_at value in the import file must be more recent than the existing profile.
  - Importing new user: The updated_at value is not mandatory.
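The updated_at rule above can be checked on your side before you upload a file. The following is a minimal sketch, not ReachFive's implementation: the function names are hypothetical, and the behaviour for an existing profile whose import line has no updated_at is deliberately left out because this rule does not describe it.

```python
from datetime import datetime
from typing import Optional


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp such as 2021-06-04T14:16:34.658Z."""
    # Replace the trailing Z so datetime.fromisoformat accepts it on older Pythons.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def passes_updated_at_rule(existing_updated_at: Optional[str],
                           incoming_updated_at: Optional[str],
                           force_update: bool = False) -> bool:
    """Return True if the import line satisfies the updated_at prerequisite."""
    if force_update:
        # The rule only applies when Force data update from file is not selected.
        return True
    if existing_updated_at is None:
        # New user: updated_at is not mandatory.
        return True
    if incoming_updated_at is None:
        # Not covered by this sketch; fail loudly rather than guess.
        raise ValueError("existing profile but no updated_at in the import line")
    # Existing user: the file value must be strictly more recent.
    return parse_ts(incoming_updated_at) > parse_ts(existing_updated_at)
```

For example, an import line stamped 2021-06-05T00:00:00Z passes against a profile last updated at 2021-06-04T14:16:34.658Z, while an identical timestamp does not.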

Multi-threaded imports

Our import jobs are multi-threaded. This means that many profiles are imported simultaneously. Therefore, it’s important that you ensure all profiles are unique; otherwise, you may get duplicate profiles.

We strongly encourage you to check the following fields to ensure each profile is unique:

- email
- phone_number (if the SMS feature is enabled)
- external_id
- custom_identifier

See the User Profile for more on these fields.
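One way to run this uniqueness check before importing is to scan the file for repeated identifier values. The sketch below assumes a JSONL import file and compares values exactly as written (no case folding or phone number normalization); the function name is hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List

# The four fields the documentation recommends checking.
IDENTIFIER_FIELDS = ("email", "phone_number", "external_id", "custom_identifier")


def find_duplicate_identifiers(lines: Iterable[str]) -> Dict[str, Dict[str, List[int]]]:
    """Map each identifier field to the values that occur on more than one line."""
    seen = {field: defaultdict(list) for field in IDENTIFIER_FIELDS}
    for line_number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # skip blank lines
        profile = json.loads(line)
        for field in IDENTIFIER_FIELDS:
            value = profile.get(field)
            if value is not None:
                seen[field][str(value)].append(line_number)
    # Keep only fields that have at least one repeated value.
    return {
        field: {value: nums for value, nums in values.items() if len(nums) > 1}
        for field, values in seen.items()
        if any(len(nums) > 1 for nums in values.values())
    }
```

Calling it with `open("profiles.jsonl", encoding="utf-8")` (a hypothetical file name) returns an empty dictionary when every identifier is unique, and otherwise lists the line numbers that share a value.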

Managed vs Lite profiles


Instructions

The instructions below apply to both creating and editing an import job definition.

|

If editing an existing import job, be sure to select the |

- Go to .
- Select New definition.
- Under General, give the import job a name and description. Don’t forget to Enable the job.
- Under Source, choose the protocol you wish to use to import the file.

  SFTP:

  - Specify the Server host for the secure FTP site.
  - Specify the Server port.
  - Under Authentication method, choose the authentication method type:

    Username and password:

    - Enter the Username for the server.
    - Enter the Password for the server.

    OpenSSH:

    - Enter the Username for the server.
    - Enter the OpenSSH private key. For example:

          -----BEGIN ENCRYPTED PRIVATE KEY-----
          MIIBpjBABgkqhkiG9w0BBQ0wMzAbBgkqhkiG9w0BBQwwDgQI5yNCu9T5SnsCAggA
          MBQGCCqGSIb3DQMHBAhJISTgOAxtYwSCAWDXK/a1lxHIbRZHud1tfRMR4ROqkmr4
          kVGAnfqTyGptZUt3ZtBgrYlFAaZ1z0wxnhmhn3KIbqebI4w0cIL/3tmQ6eBD1Ad1
          nSEjUxZCuzTkimXQ88wZLzIS9KHc8GhINiUu5rKWbyvWA13Ykc0w65Ot5MSw3cQc
          w1LEDJjTculyDcRQgiRfKH5376qTzukileeTrNebNq+wbhY1kEPAHojercB7d10E
          +QcbjJX1Tb1Zangom1qH9t/pepmV0Hn4EMzDs6DS2SWTffTddTY4dQzvksmLkP+J
          i8hkFIZwUkWpT9/k7MeklgtTiy0lR/Jj9CxAIQVxP8alLWbIqwCNRApleSmqtitt
          Z+NdsuNeTm3iUaPGYSw237tjLyVE6pr0EJqLv7VUClvJvBnH2qhQEtWYB9gvE1dS
          BioGu40pXVfjiLqhEKVVVEoHpI32oMkojhCGJs8Oow4bAxkzQFCtuWB1
          -----END ENCRYPTED PRIVATE KEY-----

  - Specify the Path where the import file is located. For example: <serverhost>/path-to-file/file.csv.

  You may have to authorize ReachFive’s outgoing IPs to access your SFTP server. For more on this, see Console: Outgoing cluster IPs.

  S3:

  - Specify the URL for the S3 bucket.
  - Specify the Bucket name.
  - Enter the Region for the server.
  - Enter the Access key for AWS.
  - Enter the Secret key for AWS.
  - Specify the Path where the import file is located. For example: <serverhost>/path-to-file/file.csv.

  Google Cloud Storage:

  - Specify the Project ID for the Google Cloud Storage.
  - Specify the App name.
  - Enter the User name for the server.
  - Specify the Bucket name.
  - Enter the Credentials in JSON format.
  - Specify the Path where the import file is located. For example: <serverhost>/path-to-file/file.csv.

- If you are importing files with additional encryption:
  - Select Encrypt.
  - Enter your password that is used as part of the encryption.
  - Specify the number of PBKDF2 iterations you used.
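The password and the iteration count both feed the PBKDF2 key derivation, so the values entered in the console must match the ones used when the file was encrypted. The sketch below only illustrates that dependency using Python's standard library. The digest (SHA-256), key length (32 bytes), and salt are assumptions chosen for the example; this page does not state which cipher, digest, or salt handling ReachFive expects.

```python
import hashlib


def derive_key(password: str, salt: bytes, iterations: int, key_length: int = 32) -> bytes:
    """Derive a key with PBKDF2-HMAC (SHA-256 is an assumption, not a documented setting)."""
    return hashlib.pbkdf2_hmac(
        "sha256",                  # assumed digest
        password.encode("utf-8"),  # the password entered in the console
        salt,                      # hypothetical salt, normally stored with the encrypted file
        iterations,                # the PBKDF2 iteration count entered in the console
        dklen=key_length,          # assumed key length
    )


salt = bytes(8)  # all-zero salt, for illustration only
key_a = derive_key("example-password", salt, 10_000)
key_b = derive_key("example-password", salt, 10_001)
# A different iteration count yields a different key, so decryption would fail.
keys_match = key_a == key_b
```

Here `keys_match` is `False`: changing the iteration count by one produces an unrelated key, which is why the count must be recorded at encryption time.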

- Under Schedule, if desired, use a Quartz scheduler for scheduling the job.
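Quartz cron expressions differ from Unix cron: they start with a seconds field and have six fields, or seven with an optional year. The sketch below labels the fields of an expression so you can sanity-check it before pasting it into the console. It assumes the Schedule setting takes a standard Quartz cron expression, which this page does not confirm; the helper name is hypothetical.

```python
from typing import Dict

# Field order of a Quartz cron expression; the year field is optional.
QUARTZ_FIELDS = ("seconds", "minutes", "hours", "day_of_month", "month", "day_of_week", "year")


def label_quartz_fields(expression: str) -> Dict[str, str]:
    """Split a Quartz cron expression and name each field."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError("a Quartz cron expression has 6 fields, or 7 with the optional year")
    return dict(zip(QUARTZ_FIELDS, parts))


# "0 0 2 * * ?" fires every day at 02:00:00.
daily_at_two = label_quartz_fields("0 0 2 * * ?")
```

In Quartz, one of day_of_month and day_of_week is normally set to `?` to mean "no specific value", as in the example above.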

- Under File format, select the file format type you wish to import. This will be either JSONL or CSV.

  JSONL:

  Choose the Encoding standard for your JSONL file.

      { "external_id": "1", "email": "foo@gmail.com" } \n (1)
      {
        "email": "bar@gmail.com",
        "name": "Joe",
        "gender": "M",
        "enroll_email_as_mfa_credential": true, (2)
        "enroll_phone_as_mfa_credential": true, (2)
        "custom_fields": { (3)
          "has_loyalty_card": true
        },
        "consents": { (4)
          "exampleConsent": {
            "date": "2021-11-23T11:42:40.858Z",
            "consent_version": {
              "language": "fr",
              "version_id": 1
            },
            "granted": true,
            "consent_type": "opt-in",
            "reporter": "managed"
          }
        },
        "addresses": [ (5)
          {
            "id": 0,
            "default": true,
            "address_type": "billing",
            "street_address": "10 rue Chaptal",
            "address_complement": "4 étage",
            "locality": "Paris",
            "postal_code": "75009",
            "region": "Île-de-France",
            "country": "France",
            "recipient": "Matthieu Winoc",
            "phone_number": "0723538943",
            "custom_fields": {
              "custom_field_example": "custom_field_example_value",
              "custom_field_example_2": 42
            }
          }
        ]
      }

  (1) Each profile must be separated by a line feed character (\n).

  (2) Enrolls the email address or phone number as an MFA credential (only registers if the identifier is verified).

  (3) Import custom_fields as an object containing a series of fields.

  (4) Import consents as an object or as a flattened field with the format consents.<consent>.<parameter> shown below.

      ...
      "consents.cgu.date": "2021-09-03T19:08:01Z",
      "consents.cgu.granted": true,
      "consents.cgu.consent_version.version_id": 2,
      "consents.cgu.consent_version.language": "fr",
      "consents.cgu.consent_type": "opt-in",
      "consents.cgu.reporter": "managed"
      ...

  (5) You can import addresses including custom address fields.

  When deleting profiles, only four fields are accepted:

  - id
  - email
  - phone_number (if the SMS feature is enabled)
  - external_id

  Only one of the four fields should be present and have a value per line. This ensures the uniqueness of the targeted profile. However, if multiple fields have values on the same line, only one is used to target a profile to delete, and the other values are discarded. The order of priority to choose the value is:

  - id
  - email
  - phone_number (if the SMS feature is enabled)
  - external_id

  For example: If both id and email are present, only id is used.

  If any additional field is present on a line, the line is omitted with a warning, but the other valid lines are processed.
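The targeting rules for JSONL delete files can be sketched as a small function that reports which identifier a line would resolve to. This is an illustration of the documented priority order, not ReachFive's code; the function name is hypothetical, and returning `None` stands in for "the line is omitted with a warning".

```python
import json
from typing import Optional, Tuple

# Documented priority order for choosing the delete target.
DELETE_FIELDS_BY_PRIORITY = ("id", "email", "phone_number", "external_id")


def pick_delete_target(line: str) -> Optional[Tuple[str, str]]:
    """Return (field, value) for the profile a delete line targets, or None if the line is skipped."""
    record = json.loads(line)
    extra_fields = set(record) - set(DELETE_FIELDS_BY_PRIORITY)
    if extra_fields:
        # Any additional field causes the line to be omitted.
        return None
    for field in DELETE_FIELDS_BY_PRIORITY:
        value = record.get(field)
        if value not in (None, ""):
            # First populated field in priority order wins; the rest are discarded.
            return field, str(value)
    return None  # no usable identifier on this line
```

Running it over each line of a file before upload shows which lines would be skipped and which identifier the others would use.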

  CSV:

  - Choose the Encoding standard for your CSV file.
  - Enter your Delimiter. The default is a semicolon (;).
  - Enter your Quote char. The default is a double quote (").
  - Enter your Escape character. The default is a backslash (\).

  For example:

      email,name,gender,custom_fields.has_loyalty_card,consents.newsletter.consent_type,consents.newsletter.granted,consents.newsletter.date,consents.newsletter.reporter,addresses.0.custom_fields.custom_field_example
      bar@gmail.com,Joe,M,true,opt-in,true,2018-05-25T15:41:09.671Z,managed,custom_field_example_value

  When deleting profiles, only four columns are accepted:

  - id
  - email
  - phone_number (if the SMS feature is enabled)
  - external_id

  Only one of the four columns should contain a value per line. This ensures the uniqueness of the targeted profile. However, if multiple columns contain values, only one is used to target a profile to delete, and the other values are discarded. The order of priority to choose the value is:

  - id
  - email
  - phone_number (if the SMS feature is enabled)
  - external_id

  For example: If both id and email are present, only id is used.

  If any other column is in the file, the whole file is rejected.
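Because a single unexpected column causes a CSV delete file to be rejected as a whole, it can be worth checking the header before uploading. The sketch below reads the header with Python's csv module using the default delimiter, quote, and escape characters listed above; the function name is hypothetical.

```python
import csv
import io
from typing import List

# The only columns accepted in a CSV delete file.
ALLOWED_DELETE_COLUMNS = {"id", "email", "phone_number", "external_id"}


def unexpected_delete_columns(csv_text: str,
                              delimiter: str = ";",
                              quotechar: str = '"',
                              escapechar: str = "\\") -> List[str]:
    """Return header columns that would cause the delete file to be rejected."""
    reader = csv.reader(
        io.StringIO(csv_text),
        delimiter=delimiter,
        quotechar=quotechar,
        escapechar=escapechar,
    )
    header = next(reader, [])  # an empty file has no header
    return [column for column in header if column.strip() not in ALLOWED_DELETE_COLUMNS]
```

An empty list means the header only contains accepted columns. If your file uses a comma as delimiter, as in the sample above, pass `delimiter=","`.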

- If desired, enable the End Job Notification Webhook.

  For more information on the webhook, see the End Job Notification Webhook page.

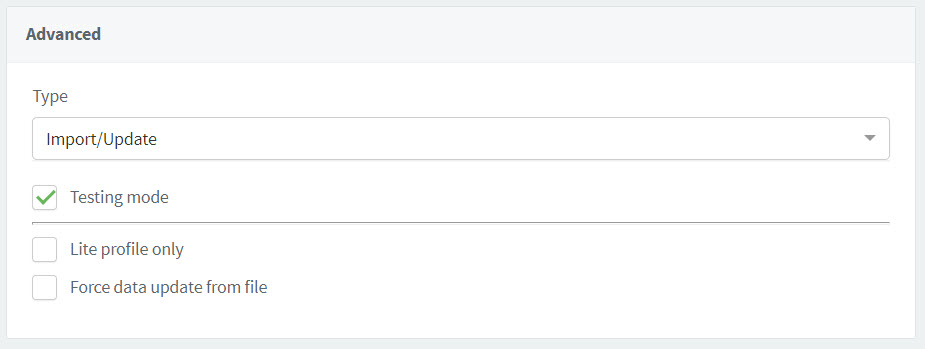

- Under Advanced:

  - Select Import/Update for the Type.

    For the Delete type, see Delete user profiles in bulk.

  - If desired, select Testing mode.

    When you import profiles in Testing mode, ReachFive tests:

    - access to the server
    - access to the import file
    - whether the import file content is valid

    Testing mode does not make any database modifications.

  - If importing Lite profiles only, select Lite profile only.

    When you import Lite profiles only, you are not importing the fully managed profiles, but rather those Lite profiles that registered with your site. You can import Lite profiles by id, email, phone_number, or external_id. You have to import Lite profiles separately from managed profiles.

    Separate import jobs: Whether you are importing new profiles or updating existing ones, managed profiles and Lite profiles should be imported separately as different jobs. If you try to import a mixed group of managed and Lite profiles, it could cause production issues.

    Created and updated fields:

    - If created_at is present in the import file, its value is kept, with a maximum tolerance of +10 minutes relative to the actual execution date. If the date exceeds this tolerance, it is set to the execution date +10 minutes.
    - If created_at is not present in the import file, it is set to the execution date of the import.
    - If updated_at is present in the import file, its value is kept, with a maximum tolerance of +10 minutes relative to the actual execution date. If the date exceeds this tolerance, it is set to the execution date +10 minutes.
    - If updated_at is not present in the import file, it is set to the execution date of the import.
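The handling of created_at and updated_at amounts to capping future dates at ten minutes past the execution date. A minimal sketch of that rule follows, assuming the tolerance only limits dates in the future and that past dates are kept unchanged; the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

TOLERANCE = timedelta(minutes=10)


def resolve_timestamp(file_value: Optional[datetime], execution_date: datetime) -> datetime:
    """Return the created_at or updated_at value that would be stored for a profile."""
    if file_value is None:
        # Field absent from the import file: use the execution date.
        return execution_date
    latest_allowed = execution_date + TOLERANCE
    # Values beyond the tolerance are brought back to execution date + 10 minutes.
    return min(file_value, latest_allowed)


run = datetime(2021, 6, 4, 14, 0, tzinfo=timezone.utc)
capped = resolve_timestamp(run + timedelta(hours=1), run)
```

In this example `capped` equals the execution date plus ten minutes, while a value five minutes ahead of the execution date would be stored as is.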

  - If you want to force an update from file, select Force data update from file.

    If there are fields with values in the current ReachFive profile that are not in the import, these fields retain their current value. However, all fields that have values in the import file replace the existing values in ReachFive.

Delete existing fields

If you want to delete an existing field, you need to pass a null value as part of the import file.

JSONL:

    {
      "updated_at": "2021-06-04T14:16:34.658Z",
      "email": "bar@gmail.com",
      "name": "Joe",
      "family_name": null, (1)
      "gender": "M"
    }

(1) Pass null to delete the existing family_name field.

CSV:

    external_id,updated_at,email,name,family_name,gender
    1,2021-06-04T14:16:34.658Z,foo@gmail.com,,,
    ,,bar@gmail.com,Joe,__null__,M (1)

(1) Pass __null__ to delete the existing family_name field.
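The update and delete behaviour described above can be summarized as a merge: fields present in the import replace the stored values, fields absent from the import are left alone, and a null value removes the field. The sketch below is an illustration of that behaviour, not ReachFive's implementation. The function names and the sample stored profile are hypothetical, and treating an empty CSV cell as "field not provided" is an assumption based on the sample rows.

```python
from typing import Any, Dict, Optional

CSV_NULL_MARKER = "__null__"


def row_to_fields(row: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Convert a CSV row to import fields, mapping __null__ to None."""
    fields: Dict[str, Optional[str]] = {}
    for column, cell in row.items():
        if cell == "":
            continue  # assumption: an empty cell means the field is not provided
        fields[column] = None if cell == CSV_NULL_MARKER else cell
    return fields


def apply_import(existing: Dict[str, Any], imported: Dict[str, Any]) -> Dict[str, Any]:
    """Merge imported fields into an existing profile."""
    merged = dict(existing)  # fields missing from the import keep their value
    for field, value in imported.items():
        if value is None:
            merged.pop(field, None)  # null deletes the existing field
        else:
            merged[field] = value    # imported value replaces the existing one
    return merged


# Hypothetical stored profile, for illustration only.
stored = {"email": "bar@gmail.com", "name": "Joseph", "family_name": "Example", "nickname": "JJ"}
row = {"external_id": "", "updated_at": "", "email": "bar@gmail.com",
       "name": "Joe", "family_name": "__null__", "gender": "M"}
result = apply_import(stored, row_to_fields(row))
```

After the merge, `result` keeps nickname, takes the new name and gender, and no longer has a family_name.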